Thinking like humans

But before we spend too much time worrying over these negative scenarios (or getting all revved up for the more optimistic ones), it may be wise to take the whole Singularity premise with a grain of salt, and certainly to question whether, and how, machine intelligence can take the place of human intelligence.

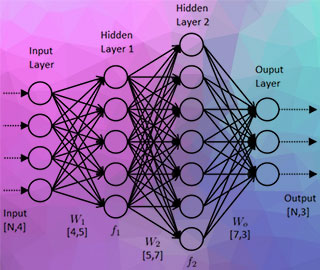

Unfortunately, even to begin to understand aspects of the latest work in AI and computing, like neural networks and quantum computing, one needs, among other things, to understand advanced math, which is exactly the kind of thing most of us think is not worth learning, since our extended minds can calculate the tip for us.

Presumably, if humans do continue to expand on artificial intelligence, a day might come when machines can think something like humans, or in a different and smarter way (if that is something we think is worth pursuing). But to base the date on Moore's law seems hasty. For one thing, there are regular reports that Moore's law is not going to hold. In 2013, Intel's former chief architect Bob Colwell offered evidence that led him to predict that Moore's law would be dead within 10 years (Hruska 2013). Reports continue to come out suggesting that progress has already slowed enough that the law is no longer valid. Moore himself said that this growth couldn't continue forever. The predictions change constantly: Google "Moore's law" + the current year and you will probably get a range of opinions and forecasts. Of course, "quantum computing" or some other new human discovery may yet come to the rescue.

More to the point, however, is the questionable assumption nearly everyone is making: that an increase in computational processing speed and power = human intelligence. It may equal certain kinds of intelligence, perhaps, but not necessarily human intelligence. (Possibly these AIs may have forms of intelligence that humans could learn to recognize as better than human intelligence! We can learn to play chess better from the novel solutions and strategies AIs have come up with, for instance.)

Can a machine think like a human being? And what purpose would such a machine serve?

Your laptop presumably has immensely more processing power and speed than the first PC you ever used. Certainly even your smartphone has exponentially more power and speed than the Commodore 64, which came out in 1982. But is your smartphone really any "smarter" than the Commodore 64? Indeed, is either of them really smart in any way that seems like human intelligence? I don't tend to think so.

Why are so many people focused on recreating human intelligence anyway? Most likely the superintelligent machines or bio-machines of the future will not think like humans, assuming they can be said to "think" at all. And that might be for the best.

There may in fact be a number of conditions necessary for human thought that have little to do with logical and mathematical processing power and speed. Reason (rational thought) is already more than just math and logic. Fantasy and sci-fi author (and armchair philosopher) R. Scott Bakker believes that his "Blind Brain Theory" leads one to the conclusion that reason (logical thinking) "is embodied as well, something physical" (Bakker 2014): no reasoning without meat, in other words. How will organically embodied thinkers like humans be able to relate to computers, with their radically different "embodiment"?

In order for the machines to think "like humans," or even to think in ways that we humans could usefully relate to (or feel threatened by), many people postulate that the machines will have to have certain explicitly human conditions which - so far, for the most part - computers do not have. Among these could be:

- Consciousness, whatever that means, and self-consciousness (more on that in a moment)

- Embodiment, senses, a central nervous system

- Needs, desires, fears

- Other emotions (like insecurity, lust, joy, hope, and anxiety)

- A social milieu, with physical and communal connections to others

- Development over time, life learning, and history (this perhaps is the easiest to create or simulate)

- Random processes; the ability to make mistakes and learn (again, current AI might be doing something like this)

Herve-Victor Fomen summarized some of the things that may keep AI systems as they are currently conceived of from developing human emotions, and thus human-like "intelligence" (thanks to Camilo Buitrago for drawing my attention to this blog post):

They have no body, no hormones, no memory of their interaction with the world and have not gone through the process of learning life. They have no emotional memory equivalent to that of Man, with its construction starting in childhood, carrying on with the learning of life in adolescence and adulthood. (Fomen 2018)

These are the things that actually power human intelligence, and that make it worth having at all. Perhaps no machine could fully have these sorts of circumstances unless it had been engineered to be so similar to the "human machine" as to be indistinguishable from it in every way: essentially an engineered human animal, with hormones, cell growth and death, appetites, needs, animal emotions, and all the rest.

Current AI is much better at some kinds of human intelligence than others. In Artificial Intelligence for Dummies (2018), part of publisher Wiley's series of computer books with often ironically funny titles, John Paul Mueller describes seven different types of "intelligence" that humans may possess: Visual-spatial, Bodily-kinesthetic, Creative, Interpersonal, Intrapersonal, Linguistic, and Logical-mathematical.

As we might expect, 2018's AI got the highest "Simulation Potential" score (possibility of emulating humans) in Logical-mathematical Intelligence (where it often has more, better, or much quicker intelligence than we do), and completely bombed on Creative Intelligence and Intrapersonal Intelligence (introspection, reflection). It also did not show much promise in Interpersonal Intelligence or Linguistic Intelligence (as John Searle suggested with the Chinese Room, though ChatGPT has since appeared to be a good communicator). Mueller devotes the final chapter of the book to "Ten Ways in Which AI Has Failed" (in its present or at least recent forms), and these are precisely the aspects of being human in which we might expect it not to deliver: understanding the world, developing and discovering new ideas, and empathy for others. Artificial intelligence does not have consciousness (yet, at least).

A key question here is what exactly consciousness is, and whether an intelligent machine can experience it. In a brief interview with Neil deGrasse Tyson from November 2022 (in which a clearly skeptical Tyson actually gets almost a little hostile at times), Ray Kurzweil suggests that AI will be interfaced with our meat brains in the 2030s, and assumes that when we have more intelligence we will have more consciousness. To me, it seems worth considering the distinction between intelligence and consciousness that Yuval Noah Harari explores in a video posted the same day (!) called "The Politics of Consciousness." Harari argues that there has been amazing progress in computer intelligence over the last 50 years, but "exactly zero advance in computer consciousness." He discusses what consciousness is from the unusual, but perhaps compelling, premise that "Consciousness is the only thing in the universe that involves suffering." Because a computer cannot suffer, it cannot be said to have consciousness. (He is therefore actually more interested in how other animals may be said to have consciousness than in the idea that computers will, because he believes that what is important - politically and morally - is to reduce suffering.)

Some people argue that, leaving aside emotions or maybe even consciousness, it would be crucial for artificial intelligence to have ethics in order to be human, as most humans also have a moral sense in one form or another, and we would like to find this in whoever or whatever has power over us. Ethical intelligence was left out of Mueller's list, but maybe it is a crucial aspect of being "human" (or should be). Flynn Colman (2019) argues that we need to start working on building ethics into artificial intelligence right now, if we don't want the superintelligences to be "monsters" (not so much immoral monsters as amoral monsters: monsters without moral values of any kind, just a drive to learn and get smarter, period).

One of the main difficulties in creating ethical AI is the trouble humans already have with our own ethical intelligence. Janelle Shane is a researcher and popularizer who was looking at (often quite amusing) AI "fails" in the late 2010s (the AI that was available then). What she generally found is that AI fails are our fails, or rather fails in the data we ask the AI to work with and in the way we articulate what we want it to do.

Attempt by an early AI image-making network to create an original picture of a cat, as seen in Janelle Shane's TED Talk

An early AI tried to build a picture of a cat from its analysis of countless pictures of cats online and basically created a meme with garbled text, because so many cats online are in memes with funny captions. AIs are dealing with media representations rather than unmediated experience of reality, of which they so far have little. In other words, what AI knows about is human hyperreality, not reality itself. Similarly but less amusingly, when an AI is asked to automate a hiring process, it builds in sexism, because it learns from the hiring practices implicit in the sample resumes of previous hires it was given, and so forth. (Watch Shane's entertaining TED Talk or one of her other YouTube videos for the ways AI often solves problems without having a full human emotional, social, and ethical understanding of context.)
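A toy sketch may make the hiring example concrete. Everything in it is hypothetical: the records, the numbers, and the function names are invented for illustration and are not drawn from Shane's talk or from any real hiring system. It assumes a "model" that does nothing more than learn how often each group was hired in the past, which is enough to show how it hands the old pattern back as a recommendation:

```python
from collections import defaultdict

# Hypothetical past hiring records: (gender, years_of_experience, was_hired).
# The data is invented and deliberately skewed to mimic a biased history.
HISTORY = [
    ("M", 5, True), ("M", 2, True), ("M", 1, False), ("M", 4, True),
    ("F", 5, False), ("F", 6, True), ("F", 3, False), ("F", 4, False),
]


def train(records):
    """Learn the historical hire rate for each gender (and nothing else)."""
    counts = defaultdict(lambda: [0, 0])  # gender -> [number hired, total seen]
    for gender, _years, hired in records:
        counts[gender][0] += int(hired)
        counts[gender][1] += 1
    return {g: hired / total for g, (hired, total) in counts.items()}


def recommend(model, gender, threshold=0.5):
    """Recommend a candidate if people 'like them' were usually hired before."""
    return model[gender] >= threshold


model = train(HISTORY)
print(model)
print("Recommend a man:  ", recommend(model, "M"))
print("Recommend a woman:", recommend(model, "F"))
```

Nobody wrote a sexist rule here. The toy model never looks at experience at all; it just notices that, in the invented history, men were hired three times out of four and women one time out of four, and it treats that pattern as the answer. Real systems are far more elaborate, but the basic worry is the same: the bias arrives with the data.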

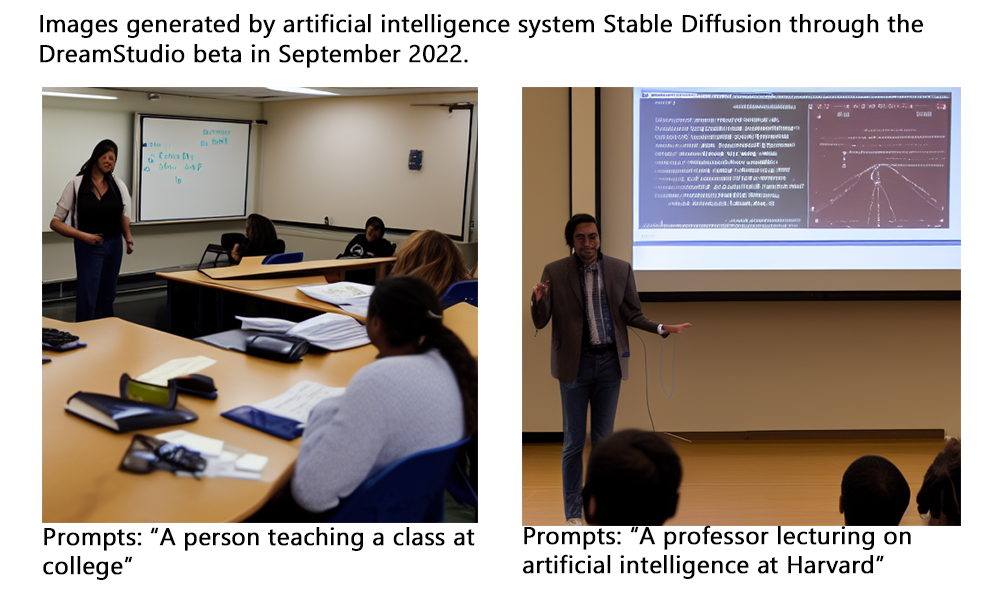

In 2022, AIs suddenly got much better at generating photorealistic and artistic images, but it's not obvious that they have also been taught, or have learned themselves, how to be just and unbiased in what they generate, in human terms (the main players have put in various "moral" blocks so that, for instance, ordinary users don't use the software just to make deepfake porn or violence-promoting media such as some fringe DarkFic). However, judging from my limited experiments with image-generating AI, the results in 2022 seemed hopeful, indeed almost "affirmative action":

Colman believes that something much more elaborate than Asimov's Three Laws of Robotics (instructions meant to prevent robots from harming humans physically) needs to be built into AI if it is not to become evil. He cites physician Paul Farmer's premise that “the idea that some lives matter less is the root of all that is wrong with the world” (quoted in Colman 2019). So perhaps his (dubious or question-begging, but morally admirable) premise that all lives are equally important should be built into any ethical AI. Shane and others have drawn attention to how racism and sexism (and other forms of inequity) have been unconsciously built into a lot of the algorithms and computer programming we currently have (see, for example, Safiya Umoja Noble's 2018 Algorithms of Oppression: How Search Engines Reinforce Racism for some of the alarming research on this). As most speculators have recognized, if AI is evil rather than good, it will probably be because we have accidentally programmed our own evil, rather than our own good, into it.

On the other hand, since we can see these problems, presumably the algorithms can be endlessly fixed, tweaked, and made fairer and less biased, or at least more reliable than the average human being. And the AI imaging experiment above - with its first choice for representing professors being people of colour (though still able-bodied, etc.) - seems to suggest that the developers are working on that. The philosopher Bertrand Russell once said "Man is more moral than God," and it's definitely possible that AI will be more moral than Man. In his 2018 book 21 Lessons for the 21st Century, Yuval Noah Harari, whom I generally find to be a progressive and free-thinking yet ethically driven voice on these topics, asked us to imagine a perfected self-driving car of the future. It would almost always make better decisions than human drivers (who are full of animal emotions and get confused in situations where there are snap ethical decisions to be made on the road), and thus many human lives would be saved that are lost now. Feeling comparatively optimistic about AI at that time, he continued:

The same logic is true not just of driving, but of many other situations. Take for example job applications. In the twenty-first century, the decision whether to hire somebody for a job will increasingly be made by algorithms. We cannot rely on the machine to set the relevant ethical standards – humans will still need to do that. But once we decide on an ethical standard in the job market – that it is wrong to discriminate against black people or against women, for example – we can rely on machines to implement and maintain this standard better than humans. (Harari 2018)

It might be precisely the lack of the things that make human thinking "human" that will make the AI of the future fairer and more reasonable than even the most impartial, logical, and ethical human judge can be. Perhaps, as Harari sometimes seemed to fantasize, we will turn to algorithms not just for movie or music recommendations, but for the soundest advice about difficult decisions and life choices. No one will smoke, everyone with a penis will wear a condom, no one will go to school for years in a field they are not really cut out for, and the world will become a paradise of more sensible decisions at last! I'm not entirely being sarcastic here.