The Chinese Room and Moore's Law

Turing's test was only intended to establish whether a machine could imitate the behaviour of a human well enough to seem human. Passing the test does not mean that the machine has achieved humanhood, or even that it really "thinks" as a human being does. This kind of artificial intelligence, where the computer seems to be thinking like a human though it isn't really, is commonly referred to as Weak AI. Strong AI, on the other hand, describes an artificial intelligence that actually does think exactly as a human being does.

In 1980, the philosopher John Searle developed a thought experiment which, he argued, discredited the idea of Strong AI in a machine. He called it The Chinese Room.

The Chinese Room

This thought experiment asks you to imagine a program that gives a computer the ability to carry on an intelligent conversation in written Chinese. In other words, this computer can pass the Turing Test in Chinese: a Chinese-speaking judge cannot tell whether the replies coming from a locked room are those of a real Chinese speaker or of a computer. Assuming the computer can convince someone that it is a Chinese-speaking human, does the computer "know Chinese" in the same way a human being who speaks the language knows it?

Searle then asks you to imagine that this computer program is translated into a monstrously complicated set of printed, plain-language English rules and given to a person who speaks only English. This person is put inside the same locked room the computer was in, with copies of all the Chinese characters he needs and with the instruction book, which spells out the computer's machine instructions so that a human can carry them out. In theory the human should be able to follow the instructions to carry on an "intelligent conversation" in Chinese, just as the computer does: "If you see these squiggles, reply with these squiggles," and so forth. (Sorry about Searle's use of the inexact term "Chinese," though perhaps it is more acceptable when referring to the written language(s); sorry also for his referring to the ideograms as "squiggles" - his point was that most Americans don't read Chinese, so the characters just look like meaningless squiggles to them.)

We imagine that the human being has an infinite amount of time to carry out the comparisons and instructions (which he or she would certainly need to have, unlike a computer).

If the instructions are good enough, the people outside the room will be convinced that the person inside the room understands Chinese. In fact, however, there is no such understanding, and to the person inside the room the symbols being exchanged are merely meaningless squiggles. Searle argued that the same would be true for any computer that appears to "think" like a human being. The computer in the Chinese room doesn't "know Chinese" any more than the English-speaking person who follows the same instructions does. There is some sort of understanding in a real human who knows Chinese that cannot be reduced to a series of instructions; this is how Searle saw it.
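The rule-following at the heart of the thought experiment can be illustrated with a toy sketch. It assumes, purely for illustration, that the instruction book is nothing more than a lookup table; Searle's imagined rule book would be vastly more elaborate, and the names `RULE_BOOK`, `FALLBACK`, and `room_reply` are hypothetical. The point is only that nothing in the procedure consults what the symbols mean.

```python
# Toy "Chinese Room": replies are produced by matching symbols, not by
# understanding them. The rule book below is a hypothetical stand-in for
# Searle's "monstrously complicated" set of instructions.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",          # "If you see these squiggles..."
    "你会说中文吗？": "会，我会说中文。",  # "...reply with these squiggles."
}

# Reply used when no rule matches the incoming symbols.
FALLBACK = "请再说一遍。"


def room_reply(message: str) -> str:
    """Return the reply the rule book dictates for the given symbols."""
    return RULE_BOOK.get(message, FALLBACK)


if __name__ == "__main__":
    print(room_reply("你好吗？"))
```

Whether the table is consulted by a processor or by a person turning pages, the steps are the same, which is why Searle holds that neither one "knows Chinese."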

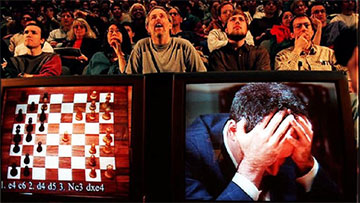

When the IBM supercomputer "Deep Blue" (designed for the sole purpose of beating the best human chess players) finally won against the world champion Garry Kasparov in 1997, Searle rushed to argue (against the assumption implicit in Turing's views about chess) that this did not mean that Deep Blue had human intelligence:

The fact that Deep Blue can go through a series of electrical impulses that we can interpret as 'beating the world champion at chess' is no more significant for human chess playing than it would be significant for human football playing if we built a steel robot which could carry the ball in a way that made it impossible for the robot to be tackled by human beings. The Deep Blue chess player is as irrelevant to human concerns as is the Deep Blue running back. (Lipking)

Garry Kasparov becomes the first human chess champion to lose to a computer.

When, in 2011, a language-recognition and database program named "Watson" beat the best human players on the game show Jeopardy!, Searle was again quick to point out that Watson didn't even know that it had won anything, once more arguing against successful symbolic interaction and data analysis/retrieval as a sufficient demonstration of actual intelligence (Vardi).

Computers continue to beat humans at our toughest games. In March 2016, AlphaGo, created by Google's London-based AI department DeepMind, beat human master Lee Sedol at the ancient Chinese game of Go, considered by many to be a harder game even than chess.

In order to beat Kasparov, Deep Blue actually had a team of human chess programmers re-programming it every night to give it a better chance of beating the chess master the next day. So Kasparov's agony at letting down the human side, as seen in the 2003 documentary Game Over, is partly unfounded - a team of humans helped Deep Blue win. Watson and AlphaGo, on the other hand, are actually "learning" as they go along, without constant help from the human programmers. Are these machines therefore "more intelligent" than humans, at least at their specific games? It would appear so.

I can't keep up with the latest in AI chess and competitive gaming, but as of 2018, Yuval Harari was suggesting that the AI system AlphaZero had been able to "learn to play chess" and then win at it in four hours!

On 7 December 2017 a critical milestone was reached, not when a computer defeated a human at chess – that’s old news – but when Google’s AlphaZero program defeated the Stockfish 8 program. Stockfish 8 was the world’s computer chess champion for 2016. It had access to centuries of accumulated human experience in chess, as well as to decades of computer experience. It was able to calculate 70 million chess positions per second. In contrast, AlphaZero performed only 80,000 such calculations per second, and its human creators never taught it any chess strategies – not even standard openings. Rather, AlphaZero used the latest machine-learning principles to self-learn chess by playing against itself. Nevertheless, out of a hundred games the novice AlphaZero played against Stockfish, AlphaZero won twenty-eight and tied seventy-two. It didn’t lose even once. Since AlphaZero learned nothing from any human, many of its winning moves and strategies seemed unconventional to human eyes. They may well be considered creative, if not downright genius. (Harari 2018, p. 31)

Maybe competitive games are not the single best indicator of human intelligence, though?

Searle and others like him want to believe that there is something missing from any computer's processing that would also be missing from the performance of the human who doesn't know Chinese but can follow the rules to assemble an adequate Chinese-character response in the locked room.

Various people talk about this missing something as consciousness, understanding, or mind.

People still argue about whether or not a sufficiently complex AI would develop these seemingly very human things. Some people, like Harari, assume that it's kind of irrelevant. Who needs consciousness when you can "learn" to beat the world chess champion in four hours? From the point of view of anyone who views intelligence in this way, human consciousness could well actually seem like an obstacle to increased intelligence ...

There was a bit of "encouraging" news in 2023 when American amateur Go player Kellin Pelrine beat a Go-playing AI in 14 out of 15 games ("Go Humans!"), though Pelrine did so "by taking advantage of a previously unknown flaw that had been identified by another computer" (Waters 2023). If people are actually concerned about improving human intelligence, maybe the best response to the creative play of AlphaZero and the discovery that allowed Pelrine to win is not to feel despair because an AI can beat a human at chess or Go, but to learn from the creative new strategies that AI comes up with. This assumes that people want to play chess or Go for the fun and intellectual stimulation of a competitive game, and not just because they want the symbolic badge of being "the smartest." Artificial intelligence will likely bring many more novel ways of seeing things and solving things, and this may be one of the ways in which it truly improves (rather than just taking the place of) our own intelligence.

Moore's Law

Theorists like Turing seemed to assume that the human brain is basically a machine - an elaborate thinking machine. If so, it would simply be a matter of increasing the complexity of the artificial brain (through an increase in the density and efficiency of the microchips) until thought (or even consciousness) emerges from the system.

Those who believe in the computational nature of human thought see slow but certain progress towards artificial replication of what human brains do. This view was summed up by the co-founder of Google, Larry Page:

My theory is that, if you look at your programming, your DNA, it's about 600 megabytes compressed, so it's smaller than any modern operating system, smaller than Linux or Windows… and that includes booting up your brain, by definition. So your program algorithms probably aren't that complicated; [intelligence] is probably more about overall computation. (quoted in Carr)

If this is the case, then we should be able to estimate the moment when the artificial computer brain becomes as complex as the human brain. And that is what the prophets of post-human intelligence like Ray Kurzweil - at whose assumptions we will take another look in the final lesson - have been doing. According to many of them, this will likely happen in your lifetime (assuming the planet and the human race survive the climate crisis, global fascism, and whatever other unforeseen perils arise, of course).

Intel co-founder Gordon Moore made a prediction in 1965, based on the rate at which the density of transistors on computer chips had been increasing. Moore's Law, as it came to be known, postulates (in the form Moore revised in 1975; his original 1965 estimate was a doubling every year) that transistor density doubles every two years, leading to exponential growth of processing power. Comparing the actual rate of growth over the last half century with what Moore's Law would predict, people have often suggested that the law has proven to be remarkably accurate (Poeter). The assumption is that if Moore's Law holds, we can calculate almost to the exact year when we will have built a mechanical brain to rival our own. In the early 21st century, most speculators were assuming it would be within a hundred years, and possibly within the first 50 years of this century. Again, we'll take a second look at this possibility in the last lesson of this course.
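To get a feel for what "doubling every two years" implies, here is a minimal sketch of the arithmetic. It assumes an idealised, perfectly regular doubling, which real chip manufacturing only approximates, and the function names `projected_density` and `years_to_reach` are hypothetical, chosen for illustration.

```python
import math


def projected_density(initial: float, years: float,
                      doubling_period: float = 2.0) -> float:
    """Density after `years`, assuming one doubling per `doubling_period` years."""
    return initial * 2 ** (years / doubling_period)


def years_to_reach(factor: float, doubling_period: float = 2.0) -> float:
    """Years needed for density to grow by `factor` under the same assumption."""
    return doubling_period * math.log2(factor)


if __name__ == "__main__":
    # Fifty years of two-year doublings is 25 doublings, i.e. 2**25.
    print(f"{projected_density(1, 50):,.0f}")  # prints 33,554,432
    # A 1024-fold increase (2**10) takes ten doublings.
    print(years_to_reach(1024))                # prints 20.0
```

Under these assumptions, half a century multiplies transistor density more than thirty-million-fold. Extrapolations about a machine that rivals the brain rest on that kind of compounding, and on the further assumption that the doubling continues.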